New paper accepted at Privacy in Machine Learning (NeurIPS 2021 Workshop)

(2 December 2021) The paper "Architecture Matters: Investigating the Influence of Differential Privacy on Neural Network Design" by Felix Morsbach, Tobias Dehling, and Ali Sunyaev has been accepted at Privacy in Machine Learning (NeurIPS 2021 Workshop), where Felix Morsbach will present it on 14 December 2021.

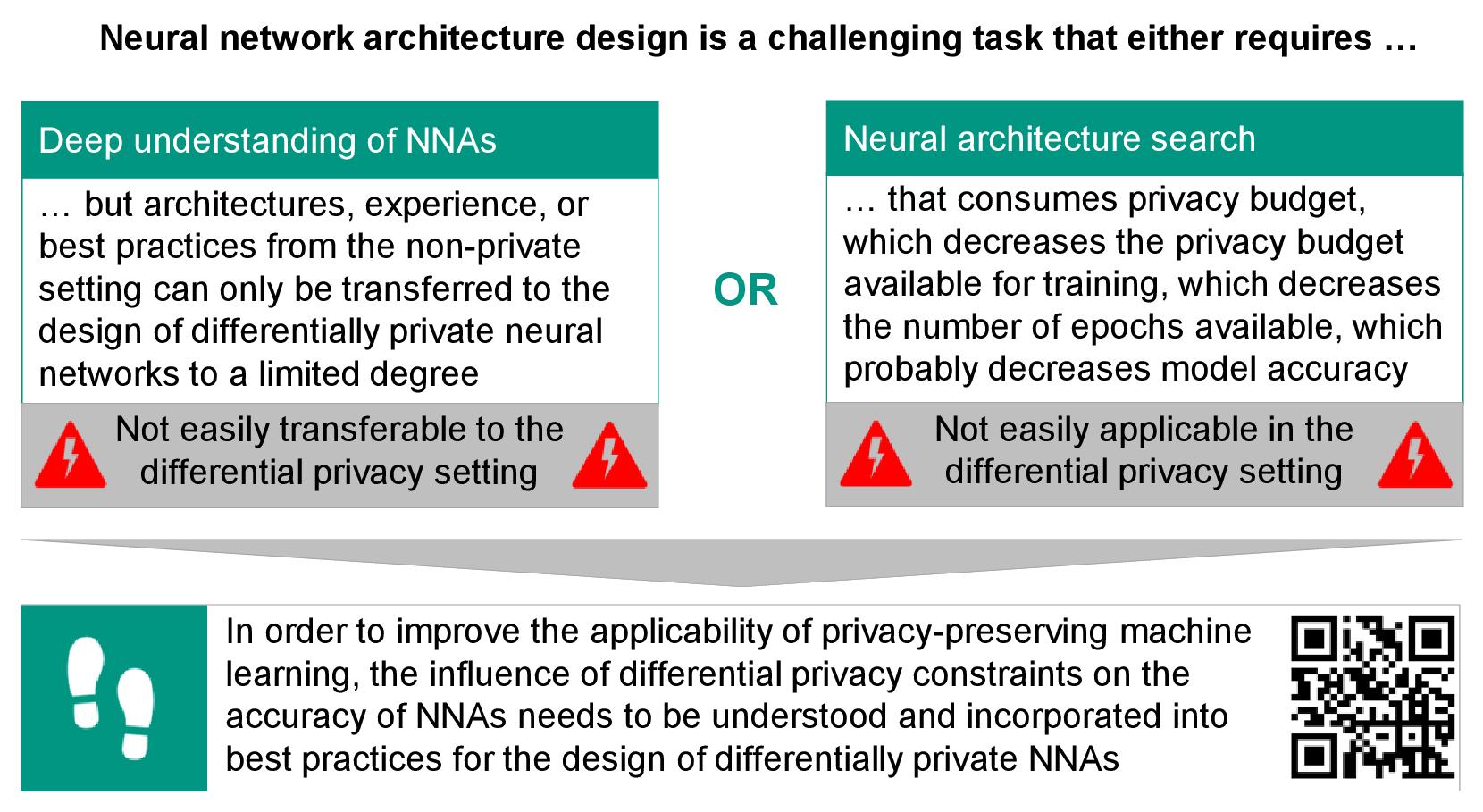

Abstract: One barrier to more widespread adoption of differentially private neural networks is the entailed accuracy loss. To address this issue, the relationship between neural network architectures and model accuracy under differential privacy constraints needs to be better understood. As a first step, we test whether extant knowledge on architecture design also holds in the differentially private setting. Our findings show that it does not; architectures that perform well without differential privacy, do not necessarily do so with differential privacy. Consequently, extant knowledge on neural network architecture design cannot be seamlessly translated into the differential privacy context. Future research is required to better understand the relationship between neural network architectures and model accuracy to enable better architecture design choices under differential privacy constraints.

Link (paper): https://arxiv.org/abs/2111.14924

Link (workshop): https://priml2021.github.io/