Two Articles Accepted at CHI 2024

(12.03.2024) The critical information infrastructure research group had two papers accepted at the ACM CHI Conference on Human Factors in Computing Systems (CHI 2024). First, Florian Leiser collaborated with Sven Eckhardt, Valentin Leuthe, Merlin Knaeble, Alexander Maedche, Gerhard Schwabe, and Ali Sunyaev to develop HILL: A Hallucination Identifier for Large Language Models. Second, Jeanine Kirchner-Krath led a collaboration with Manuel Schmidt-Kraepelin, Sofia Schöbel, Mathias Ulrich, Ali Sunyaev, and Harald F. O. von Korflesch that investigates how gamification can help students overcome procrastination in academia.

Both abstracts are provided below:

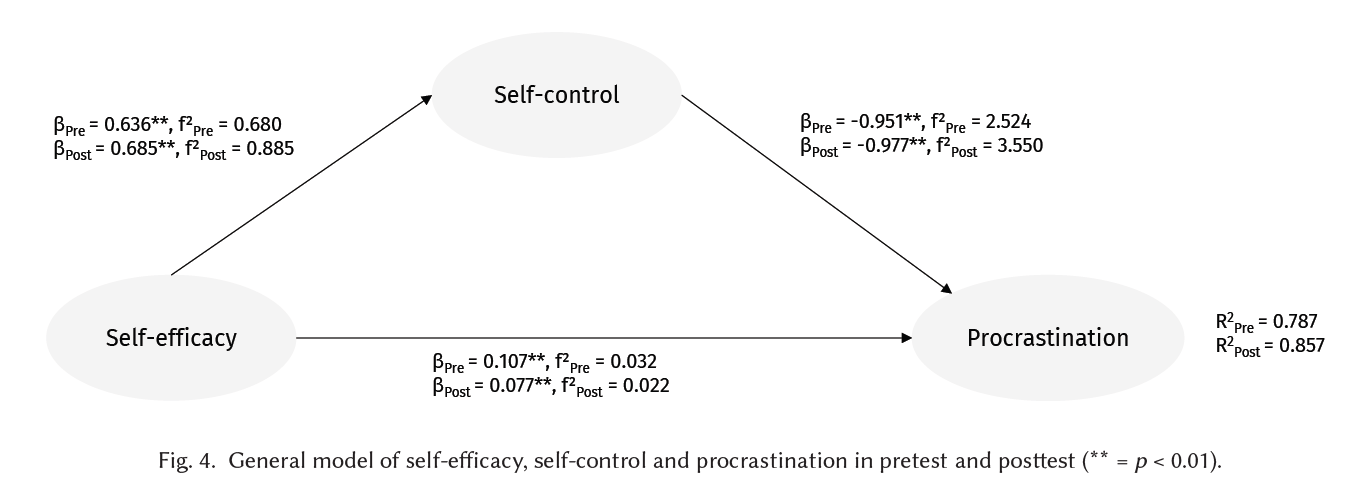

Outplay Your Weaker Self: A Mixed-Methods Study on Gamification to Overcome Procrastination in Academia

Jeanine Kirchner-Krath, Manuel Schmidt-Kraepelin, Sofia Schöbel, Mathias Ulrich, Ali Sunyaev, Harald F. O. von Korflesch

Abstract: Procrastination is the deliberate postponing of tasks knowing that it will have negative consequences in the future. Despite the potentially serious impact on mental and physical health, research has just started to explore the potential of information systems to help students combat procrastination. Specifically, while existing learning systems increasingly employ elements of game design to transform learning into an enjoyable and purposeful adventure, little is known about the effects of gameful approaches to overcome procrastination in academic settings. This study advances knowledge on gamification to counter procrastination by conducting a mixed-methods study among higher education students. Our results shed light on usage patterns and outcomes of gamification on self-efficacy, self-control, and procrastination behaviors. The findings contribute to theory by providing a better understanding of the potential of gamification to tackle procrastination. Practitioners are supported by implications on how to design gamified learning systems to support learners in self-organized work.

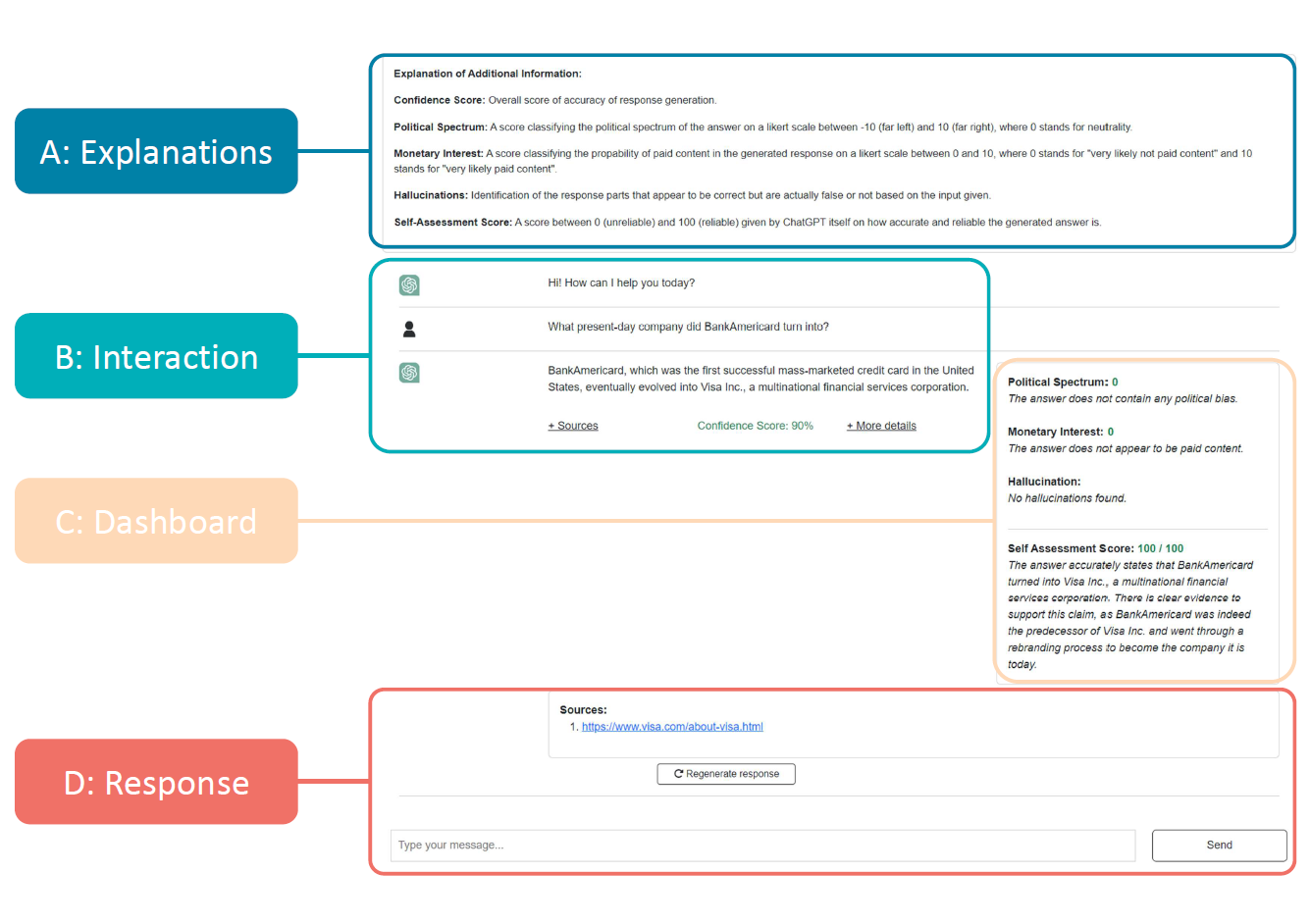

HILL: A Hallucination Identifier for Large Language Models

Florian Leiser, Sven Eckhardt, Valentin Leuthe, Merlin Knaeble, Alexander Maedche, Gerhard Schwabe, Ali Sunyaev

Abstract: Large language models (LLMs) are prone to hallucinations, i.e., nonsensical, unfaithful, and undesirable text. Users tend to overrely on LLMs and corresponding hallucinations which can lead to misinterpretations and errors. To tackle the problem of overreliance, we propose HILL, the "Hallucination Identifier for Large Language Models". First, we identified design features for HILL with a Wizard of Oz approach with nine participants. Subsequently, we implemented HILL based on the identified design features and evaluated HILL's interface design by surveying 17 participants. Further, we investigated HILL's functionality to identify hallucinations based on an existing question-answering dataset and five user interviews. We find that HILL can correctly identify and highlight hallucinations in LLM responses which enables users to handle LLM responses with more caution. With that, we propose an easy-to-implement adaptation to existing LLMs and demonstrate the relevance of user-centered designs of AI artifacts.

The HILL article is available at: https://arxiv.org/abs/2403.06710.